Have you ever wondered how a single NGINX instance can handle so many concurrent connections? Its event-driven architecture is one of the key reasons. With non-blocking, event-driven I/O, NGINX can serve very large numbers of concurrent connections (up to millions on suitably tuned hardware) while keeping per-connection overhead low. This lets it absorb heavy traffic while using CPU and memory efficiently.

To better understand this, let’s first look at what NGINX actually does.

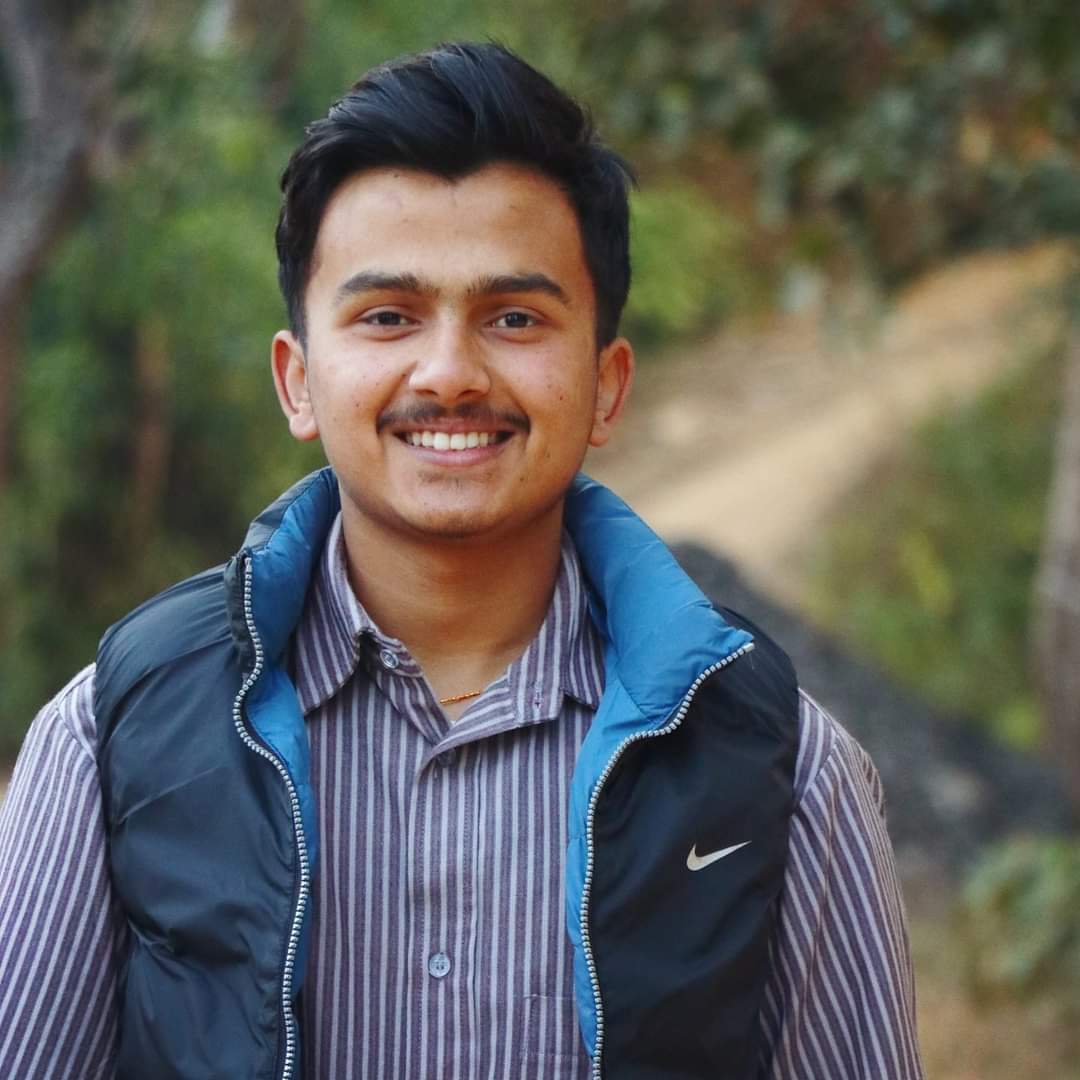

NGINX is an open source web server that also works as a reverse proxy for HTTP and HTTPS, a mail proxy for SMTP, POP3, and IMAP, a load balancer, and an HTTP cache.

As a reverse proxy, NGINX sits between clients and the backend web services: it receives client requests and routes them to a backend. It can also balance load across multiple backend servers.

It takes care of cross-cutting work such as SSL termination, rate limiting, caching, and configuration management. Because this logic is centralized in NGINX, the backend web service can be scaled more easily.

The following figure shows the flow of NGINX:

How does NGINX handle connections?

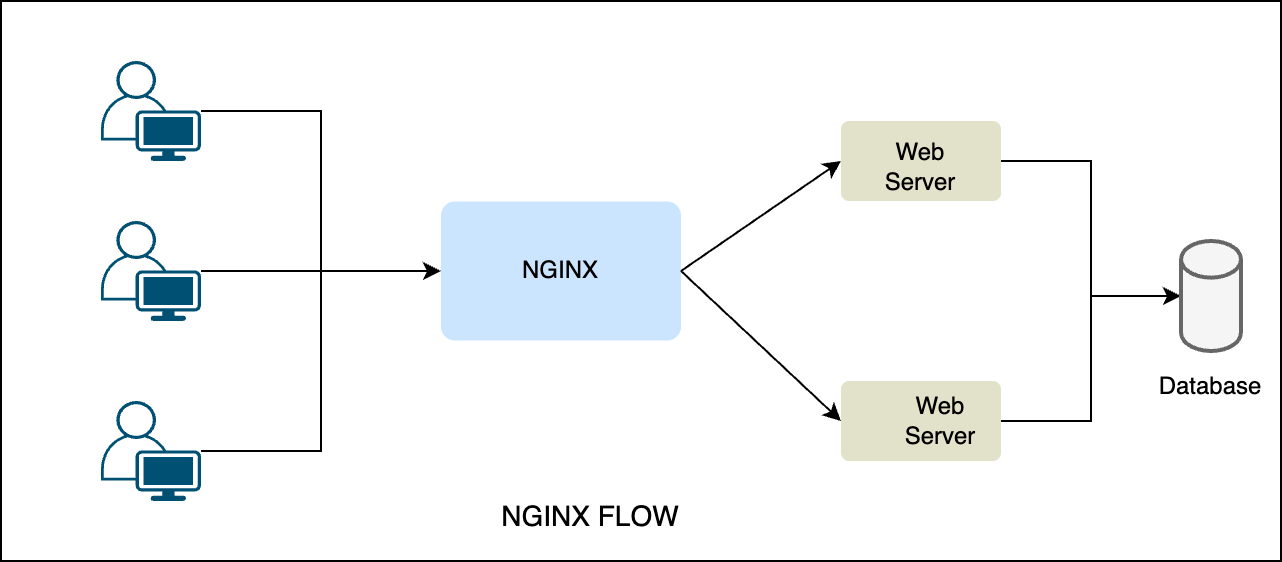

When a web server starts, it asks the operating system to bind a listening socket to the port it wants to serve.

For example, a web server would typically listen on port 80 (HTTP) or 443 (HTTPS).

The magic begins here: when a client connects, the OS kernel’s network stack performs the TCP handshake and establishes the connection. Each connection is represented by a socket (a communication endpoint between client and server), which the server receives as a file descriptor when it accepts the connection.

The figure above shows this client-server connection flow in general.
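The connection flow described in this section can be reproduced in a few lines of Python. This is only a sketch of the operating system behaviour, not NGINX code: the function name `open_and_accept` is made up for illustration, and it binds to a free loopback port (port 0) rather than 80 or 443, which usually require elevated privileges.

```python
import socket


def open_and_accept():
    """Bind a listener, connect one client, and return the descriptors involved."""
    # Ask the OS for a listening socket. Port 0 means "any free port"; a real
    # web server would bind 80 or 443 instead.
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))
    listener.listen()
    port = listener.getsockname()[1]

    # The kernel completes the TCP handshake when the client connects,
    # even though the server has not called accept() yet.
    client = socket.create_connection(("127.0.0.1", port))

    # accept() hands the established connection to the server as a new
    # socket with its own file descriptor.
    conn, peer = listener.accept()
    fds = (listener.fileno(), conn.fileno())

    for sock in (conn, client, listener):
        sock.close()
    return port, fds, peer


if __name__ == "__main__":
    port, (listen_fd, conn_fd), peer = open_and_accept()
    print(f"listening on port {port} with fd {listen_fd}")
    print(f"connection from {peer} got its own fd {conn_fd}")
```

The listening socket and the accepted connection have different file descriptors, which is what lets a server track every client connection separately.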

NGINX Architecture

Before diving further, let’s look at the architecture of NGINX.

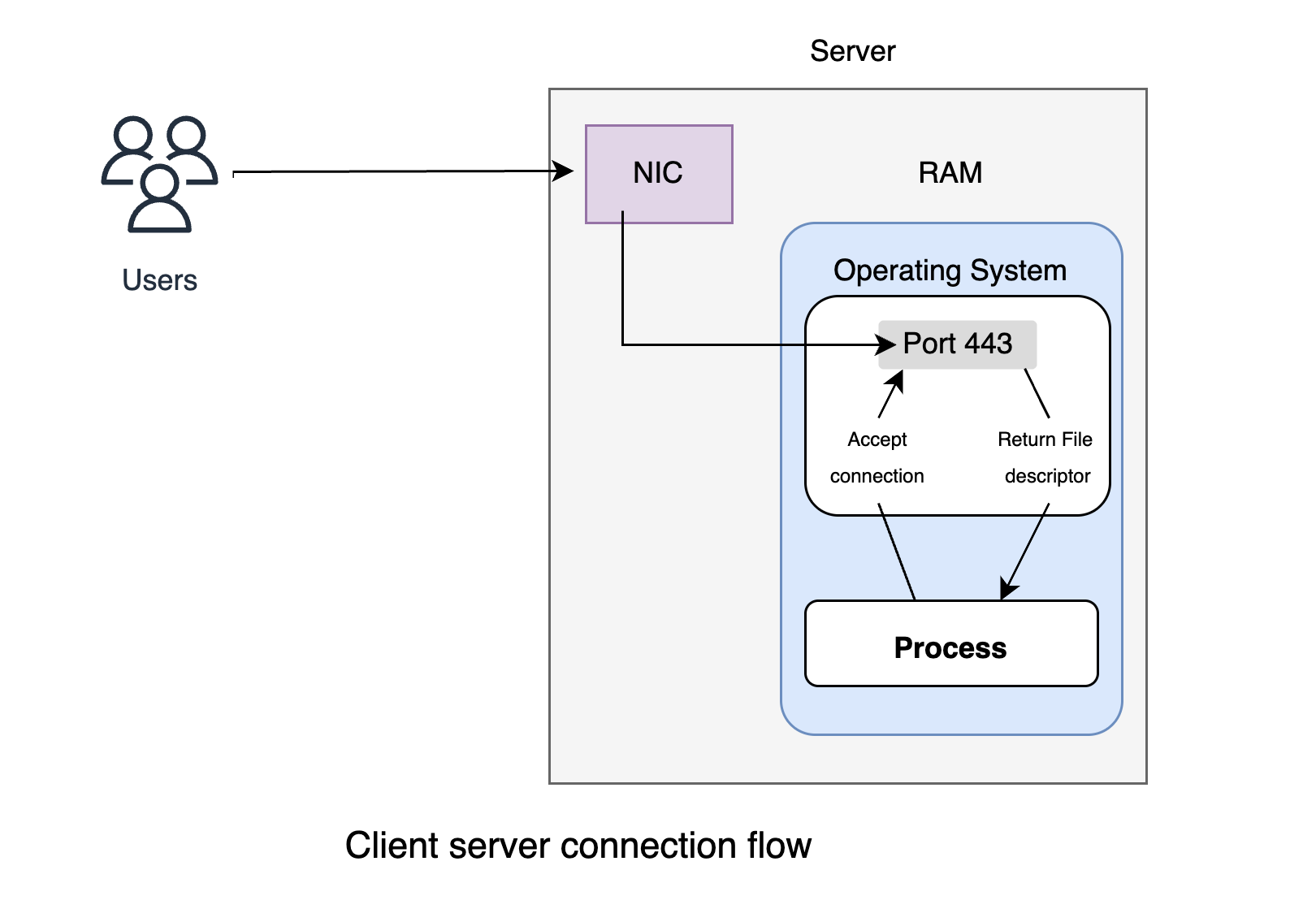

NGINX uses a master-worker process architecture built around an event-driven, asynchronous, non-blocking model.

Master

As mentioned above, NGINX runs one master process and several worker processes. The master does not handle client requests itself and does not relay responses. It reads and validates the configuration, binds the listening ports, and spawns and supervises the worker processes.

It also manages configuration reloads by starting new workers with the new configuration and gracefully shutting down the old ones.

Workers

Workers do the actual request handling. Each worker accepts connections directly from the shared listening sockets and sends responses straight back to the client, without going through the master. A single worker can handle more than 1,000 connections at a time in a single-threaded manner, depending on configuration. Because one thread serves all of a worker’s connections, NGINX avoids the per-thread or per-process memory that a thread-per-connection or process-per-connection design would need. Each worker runs a single-threaded, non-blocking event loop and can be pinned to a CPU core (via the `worker_cpu_affinity` directive), which reduces context-switching overhead.
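In NGINX’s process model, the master binds the listening socket and the workers accept connections on it themselves. A minimal Unix-only sketch of that idea is shown below. It is not how NGINX is implemented: `run_master` and `worker_loop` are hypothetical names, the workers here block on `accept()` instead of running an event loop, and the master doubles as a test client purely to keep the demo self-contained.

```python
import os
import signal
import socket


def worker_loop(listener):
    """Worker: accept on the inherited socket and answer the client directly."""
    while True:
        conn, _addr = listener.accept()
        with conn:
            conn.sendall(f"worker {os.getpid()}".encode())


def run_master(num_workers=2, num_requests=4):
    """Fork workers that share one listening socket; return (worker_pids, replies)."""
    # Master: bind the listening socket once, before any worker exists.
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))
    listener.listen()
    port = listener.getsockname()[1]

    worker_pids = []
    for _ in range(num_workers):
        pid = os.fork()
        if pid == 0:
            try:
                worker_loop(listener)  # child process: serve until killed
            finally:
                os._exit(0)            # never fall back into master code
        worker_pids.append(pid)

    # Stand-in clients (demo only): each reply comes straight from a worker.
    replies = []
    for _ in range(num_requests):
        with socket.create_connection(("127.0.0.1", port)) as client:
            replies.append(client.recv(64).decode())

    # SIGKILL keeps the demo short; NGINX shuts its workers down gracefully.
    for pid in worker_pids:
        os.kill(pid, signal.SIGKILL)
        os.waitpid(pid, 0)
    listener.close()
    return worker_pids, replies


if __name__ == "__main__":
    pids, replies = run_master()
    print("workers:", pids)
    print("replies:", replies)
```

Every reply carries the process ID of the worker that served it, and none of them is the master’s, which mirrors the division of labour described above.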

Cache

The NGINX cache speeds up responses by serving content from the cache instead of fetching it from the backend server every time. Responses are stored in the cache on the first request. A dedicated cache manager process handles cache management. Each worker runs an event loop, leveraging epoll or kqueue to monitor sockets for events such as new connections or incoming data.
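The store-on-first-request behaviour described above can be sketched as follows. This is an illustration of the idea only: NGINX keeps cached responses in files with metadata in shared memory, whereas this hypothetical `ResponseCache` class uses a plain in-memory dictionary, and the `fetch` callback and time-to-live value are assumptions made for the example.

```python
import time


class ResponseCache:
    """Tiny in-memory response cache with a time-to-live (illustrative only)."""

    def __init__(self, fetch, ttl_seconds=60.0, clock=time.monotonic):
        self._fetch = fetch      # callable that asks the backend for a response
        self._ttl = ttl_seconds
        self._clock = clock      # injectable so expiry can be tested
        self._entries = {}       # key -> (expires_at, response)

    def get(self, key):
        """Return (response, status), where status is "HIT" or "MISS"."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1], "HIT"
        # Not cached or expired: go to the backend and store the result.
        response = self._fetch(key)
        self._entries[key] = (now + self._ttl, response)
        return response, "MISS"


if __name__ == "__main__":
    cache = ResponseCache(lambda path: f"body of {path}", ttl_seconds=10)
    print(cache.get("/index.html"))  # first request goes to the backend
    print(cache.get("/index.html"))  # second request is served from the cache
```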

Event Loop

NGINX uses OS-specific mechanisms like epoll (Linux) or kqueue (FreeBSD, macOS) to monitor thousands of sockets for I/O events (new connections, incoming data). Events trigger callbacks, allowing workers to process multiple requests concurrently without blocking.

```
// Event loop flow (pseudocode)
while (true) {
    events = get_events();        // e.g., epoll_wait()
    for each event in events {
        process_event(event);     // handle read/write or a new connection
    }
}
```
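For a runnable version of the same loop, Python’s standard `selectors` module wraps epoll, kqueue, and similar mechanisms behind one interface. The sketch below is an assumption-laden toy, not NGINX’s implementation: `run_event_loop` is a hypothetical function that echoes each (non-empty) message back in upper case, and it creates its own stand-in clients so it can run on its own.

```python
import selectors
import socket


def run_event_loop(messages):
    """Serve len(messages) clients from one thread; return their replies."""
    sel = selectors.DefaultSelector()  # picks epoll, kqueue, etc. per platform

    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))
    listener.listen()
    listener.setblocking(False)
    sel.register(listener, selectors.EVENT_READ, data="accept")

    # Stand-in clients: connect and send up front so several connections
    # are pending at the same time.
    clients = []
    for message in messages:
        client = socket.create_connection(listener.getsockname())
        client.sendall(message)
        clients.append(client)

    handled = 0
    while handled < len(messages):
        for key, _mask in sel.select():  # waits for events, like epoll_wait()
            if key.data == "accept":
                # New connection: register it and go back to the loop.
                conn, _addr = key.fileobj.accept()
                conn.setblocking(False)
                sel.register(conn, selectors.EVENT_READ, data="echo")
            else:
                # Data is ready on an existing connection: answer and close.
                conn = key.fileobj
                data = conn.recv(1024)
                if data:
                    conn.sendall(data.upper())
                    handled += 1
                sel.unregister(conn)
                conn.close()

    replies = [client.recv(1024) for client in clients]
    for client in clients:
        client.close()
    sel.unregister(listener)
    listener.close()
    sel.close()
    return replies


if __name__ == "__main__":
    print(run_event_loop([b"get /a", b"get /b", b"get /c"]))
```

One thread serves all three connections: it never waits on a single socket, it only reacts to whichever sockets the selector reports as ready.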

Handling Concurrent Connections

NGINX’s ability to handle millions of concurrent connections is rooted in its efficient resource management:

- Non-Blocking I/O: Each connection is processed asynchronously, allowing the worker to handle multiple connections without waiting for I/O operations. This enables a single worker to manage a huge number of concurrent connections.

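- Low Memory Overhead: Each connection needs only a small amount of memory, depending on its state and the configured buffer sizes. The NGINX project cites about 2.5 MB for 10,000 inactive HTTP keep-alive connections. This low footprint, combined with OS kernel tuning, allows NGINX to scale connections without exhausting resources.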

- Scalability: With multiple workers (typically one per CPU core), NGINX can spread a large number of connections across all cores. This contrasts sharply with traditional models. Process-per-request is limited by memory: at 100 MB per process, 32 GB of RAM caps out at roughly 320 concurrent connections. Thread-per-request suffers from context switching and queue waiting, which reduces efficiency under high load.

The following table summarizes the comparison:

| Model | Resource Usage | Scalability |
|---|---|---|
| Process-per-request | High | Low |
| Thread-per-request | Moderate (context switching overhead) | Moderate (limited by threads) |
| NGINX event-driven | Low | High |
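The process-per-request ceiling mentioned above is simple arithmetic, and it can be checked with a short script. The 32 GB and 100 MB figures come from the text; the 10 KB per-connection figure for an event-driven server is a purely hypothetical value chosen for comparison, and the calculation ignores memory used by the OS and everything else on the machine.

```python
# Decimal units, matching the round numbers used in the text.
KB = 1000
MB = 1000 ** 2
GB = 1000 ** 3


def max_concurrent(total_ram_bytes, bytes_per_connection):
    """Upper bound on concurrent connections if memory were the only limit."""
    return total_ram_bytes // bytes_per_connection


# Process-per-request: 100 MB per process on a 32 GB machine.
process_model = max_concurrent(32 * GB, 100 * MB)

# Event-driven: assume (hypothetically) 10 KB of state per connection.
event_model = max_concurrent(32 * GB, 10 * KB)

if __name__ == "__main__":
    print(f"process-per-request: {process_model:,} connections")
    print(f"event-driven (assumed 10 KB each): {event_model:,} connections")
```

Under these assumptions the memory-bound ceiling moves from hundreds of connections to millions, which is the gap the table summarizes.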

Performance Benefits

NGINX’s event-driven architecture offers significant performance advantages:

- Reduced Context Switching: Single-threaded workers, optionally pinned to CPU cores, keep context switching to a minimum, reducing CPU usage and improving throughput. This is a key factor in its ability to handle high request rates without degradation.

- High Throughput: A single worker can process up to around 100,000 requests per second, depending heavily on hardware, configuration, and workload.

- Scalability Without Degradation: As user numbers grow, NGINX maintains performance due to efficient resource use. The compute work done per event typically takes microseconds, which further helps throughput.

- No Downtime for Updates: Configuration updates and binary upgrades can occur without downtime, ensuring continuous operation.

Scaling with NGINX

NGINX scales both horizontally and vertically:

- Horizontal Scaling: It acts as a load balancer, distributing traffic across backend servers using strategies like:

  - Round-robin: Distributes requests evenly across the servers in turn.

  - Least Connections: Routes to the server with the fewest active connections.

  - Hash: Uses the client IP (IP hash) or another key (generic hash) for consistent routing.

  - Least Time: Sends to the server with the lowest average latency (available in NGINX Plus).

  - Random: Distributes requests randomly.

- Vertical Scaling: On a single server, NGINX leverages multiple CPU cores, with each core running a worker process. This allows it to absorb traffic spikes and serve very large numbers of concurrent connections. System tuning, such as adjusting kernel parameters, further enhances scalability, ensuring NGINX can handle high-concurrency scenarios effectively.
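The selection logic behind three of the load-balancing strategies listed above can be sketched in a few lines. These are simplified illustrations rather than NGINX’s algorithms: the `Backend` class and function names are hypothetical, weights and health checks are left out, and the hash strategy uses a plain CRC32 of the key, which is an assumption made for the example.

```python
import itertools
import zlib
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    active_connections: int = 0


def round_robin(backends):
    """Return an iterator that yields the backends in turn, forever."""
    return itertools.cycle(backends)


def least_connections(backends):
    """Pick the backend with the fewest active connections."""
    return min(backends, key=lambda b: b.active_connections)


def hash_by_key(backends, key):
    """Map a key (for example a client IP) to the same backend every time."""
    index = zlib.crc32(key.encode("utf-8")) % len(backends)
    return backends[index]


if __name__ == "__main__":
    pool = [Backend("app1", 5), Backend("app2", 2), Backend("app3", 7)]
    rr = round_robin(pool)
    print([next(rr).name for _ in range(4)])
    print(least_connections(pool).name)
    print(hash_by_key(pool, "203.0.113.7").name)
```

Round-robin and least connections spread load, while hashing trades some evenness for stickiness: the same client key keeps landing on the same backend as long as the pool does not change.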

Conclusion

NGINX’s event-driven, non-blocking architecture is the cornerstone of its ability to handle millions of concurrent connections. By leveraging asynchronous I/O, single-threaded workers, and efficient event handling, it minimizes resource usage and maximizes performance. Compared to traditional process- or thread-based models, NGINX offers better scalability, handling up to 100,000 requests per second per worker and scaling to millions of connections with minimal overhead. Its real-world adoption by companies like Netflix and Dropbox underscores its effectiveness, making it an essential tool for modern web infrastructures.


Looking to scale your infrastructure or optimize NGINX for high traffic? Our experts are here to help! Book a free consulting call with Gurzu DevOps and cloud engineering experts.

Gurzu is a software development company passionate about building software that solves real-life problems. Explore some of our awesome projects in our success stories.

Have a tech idea that you need help turning into reality? Book a free consulting session with us!